=Overview

We present Temporal and Object Quantification Networks (TOQ-Nets), a new class of neuro-symbolic networks with a structural bias that enables them to learn to recognize complex relational-temporal events.

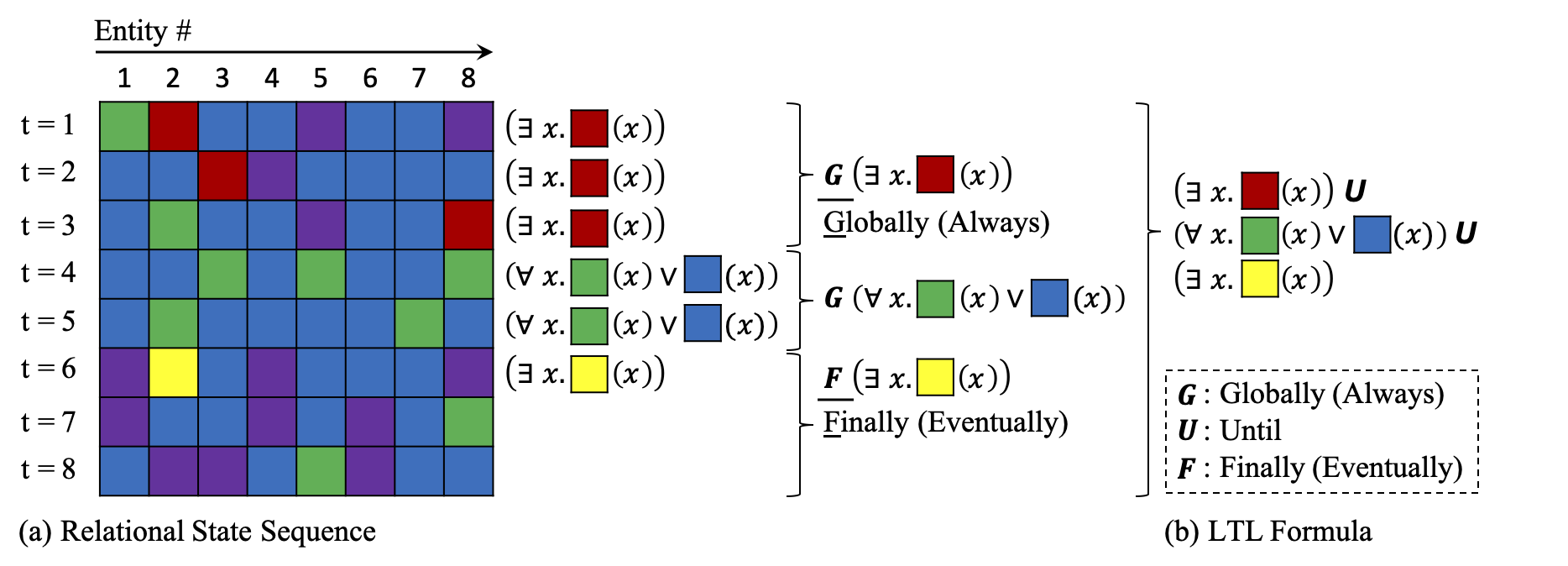

Figure 1: (a) An input sequence composed of relational states: each column represents the state of an entity that changes over time. A logic formula describes a complex concept or feature that is true of this temporal sequence using object and temporal quantification. The sequence is segmented into three stages: throughout the first stage, ■ holds for at least one entity, until the second stage, in which each entity is always either ■ or ■, until the third stage, in which eventually ■ becomes true for at least one of the entities. (b) Such events can be described using first-order linear temporal logic expressions. Our model can learn neuro-symbolic recognition models for such relational-temporal events from data.

Our model includes reasoning layers that implement finite-domain quantification over objects and time. This structure allows TOQ-Nets to generalize directly to inputs with varying numbers of objects and to temporal sequences of varying lengths. We evaluate TOQ-Nets on input domains that require recognizing event types in terms of complex temporal-relational patterns. We demonstrate that TOQ-Nets can generalize from small amounts of data to scenarios containing more objects than were present during training and to temporal warpings of input sequences.

=Model

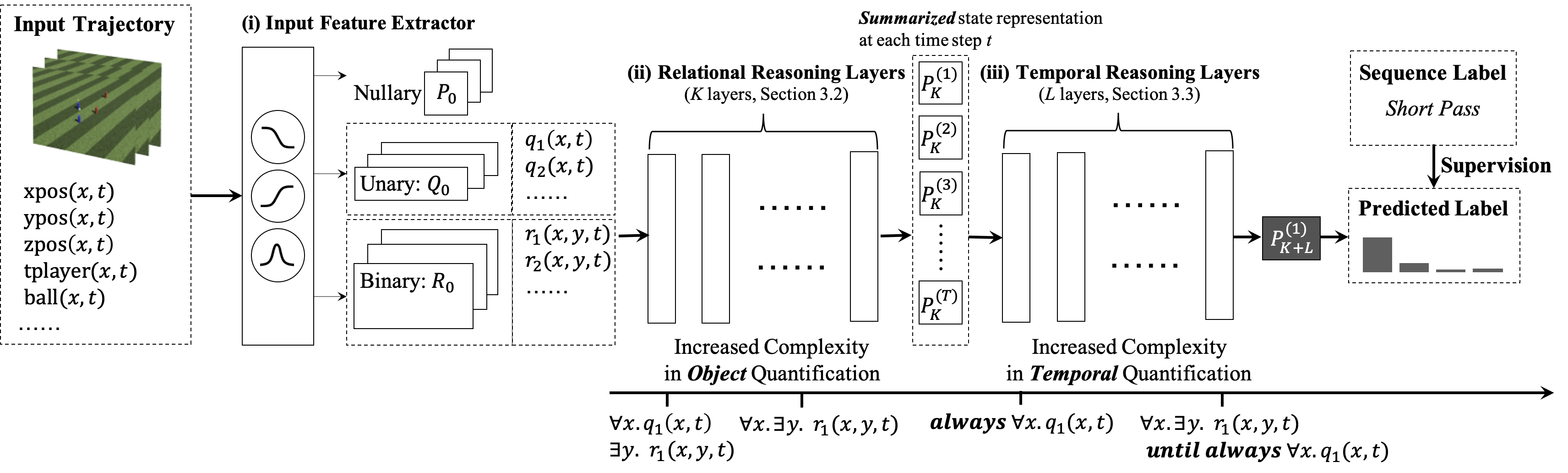

Figure 2: A TOQ-Net contains three modules: (i) an input feature extractor, (ii) relational reasoning layers, and (iii) temporal reasoning layers. To illustrate the model's representational power, we show that logical forms of increasing complexity can be realized by stacking multiple layers.

- Input Representation

- The input to a TOQ-Net is a tensor representation of the properties of all entities at each moment in time.
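To make the input format concrete, here is a minimal sketch in plain Python. The `[time][entity][property]` layout, the helper names `make_input` and `shape`, and the toy position features are all assumptions for illustration; they are not taken from the released code.

```python
# Hypothetical toy input: T time steps, N entities, D properties per entity.
# The (time, entity, property) ordering is an assumption for illustration.

def make_input(trajectories):
    """Stack per-entity trajectories into a [T][N][D] nested list.

    trajectories: list of N entity trajectories, each a list of T
    property vectors of length D.
    """
    num_steps = len(trajectories[0])
    if any(len(traj) != num_steps for traj in trajectories):
        raise ValueError("all entities must be observed for the same number of steps")
    # Transpose entity-major data into time-major order.
    return [[traj[t] for traj in trajectories] for t in range(num_steps)]


def shape(states):
    """Return (T, N, D) for a nested-list state tensor."""
    return (len(states), len(states[0]), len(states[0][0]))


# Two entities observed for three time steps; properties = (x, y) position.
ball = [[0.0, 0.0], [1.0, 0.5], [2.0, 1.0]]
player = [[5.0, 5.0], [4.0, 4.0], [3.0, 3.0]]
states = make_input([ball, player])
print(shape(states))  # (3, 2, 2)
```

Neither the number of entities nor the number of time steps is fixed by this representation, which is what lets later layers operate on inputs of varying size.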

- Input Feature Extractor

- The first layer of a TOQ-Net is an input feature extractor that computes temporal features for each entity from that entity's properties within a fixed, local time window.
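The idea of a fixed, local time window can be sketched as follows. This is a hand-written stand-in, not the learned extractor: the function name `window_features`, the window size, and the choice of summary statistics (per-property mean and net change) are assumptions made for illustration.

```python
def window_features(states, window=2):
    """Summarize each entity over a sliding window of time steps.

    states: nested list of shape [T][N][D].
    Returns a nested list of shape [T - window + 1][N][2 * D]: for every
    window and entity, the per-property mean followed by the net change
    (last value minus first value) inside the window.
    """
    num_steps = len(states)
    num_entities = len(states[0])
    features = []
    for start in range(num_steps - window + 1):
        frame = []
        for i in range(num_entities):
            # Trajectory of entity i restricted to the current window.
            chunk = [states[t][i] for t in range(start, start + window)]
            mean = [sum(col) / window for col in zip(*chunk)]
            delta = [last - first for first, last in zip(chunk[0], chunk[-1])]
            frame.append(mean + delta)
        features.append(frame)
    return features


# Toy input: 3 time steps, 2 entities, 2 properties each.
states = [
    [[0.0, 0.0], [5.0, 5.0]],
    [[1.0, 0.5], [4.0, 4.0]],
    [[2.0, 1.0], [3.0, 3.0]],
]
feats = window_features(states, window=2)
print(feats[0][0])  # [0.5, 0.25, 1.0, 0.5]
```

Because the same function is applied to every entity and every window position, the extractor is independent of how many entities or time steps the input contains.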

- Relational Reasoning Layers

- Second, these temporal-relational features go through several relational reasoning layers, each of which performs linear transformations, sigmoid activation, and object quantification operations. The linear and sigmoid functions allow the network to realize learned Boolean logical functions, and the object quantification operators can realize quantifiers.
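A single relational reasoning step can be sketched as below. We assume here that existential and universal quantification are approximated by taking the maximum and minimum of soft truth values over the entity axis; the function names and the hand-set weights are hypothetical and stand in for learned parameters.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relational_layer(entity_feats, weights, bias):
    """Apply a shared linear map + sigmoid per entity, then quantify.

    entity_feats: [N][D] features of N entities at one time step.
    weights: [K][D], bias: [K] (shared across entities).
    Returns (exists, forall), each a list of K soft truth values.
    Assumption: max over entities ~ "exists", min over entities ~ "forall".
    """
    per_entity = [
        [sigmoid(sum(w * x for w, x in zip(row, feats)) + b)
         for row, b in zip(weights, bias)]
        for feats in entity_feats
    ]
    # zip(*per_entity) groups the K predicate values by predicate,
    # so each column ranges over entities.
    exists = [max(col) for col in zip(*per_entity)]
    forall = [min(col) for col in zip(*per_entity)]
    return exists, forall


# Hand-set parameters realizing the soft predicate "feature > 0.5".
weights, bias = [[10.0]], [-5.0]

# Two entities: only the first satisfies the predicate.
exists_2, forall_2 = relational_layer([[0.9], [0.1]], weights, bias)

# Three entities, same parameters: all satisfy the predicate.
exists_3, forall_3 = relational_layer([[0.9], [0.8], [0.7]], weights, bias)

print(exists_2, forall_2)
print(exists_3, forall_3)
```

The same `weights` and `bias` are reused for two and for three entities, which illustrates why a layer built this way can be applied to scenes with more objects than it was configured for.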

- Temporal Reasoning Layers

- The relational reasoning layers perform a final quantification, computing for each time step a set of nullary features that are passed to the temporal reasoning layers. Each temporal reasoning layer performs linear transformations, sigmoid activation, and temporal quantification, allowing the model to realize a subset of linear temporal logic.
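Temporal quantification over a sequence of nullary features can be sketched in the same spirit. We assume that "eventually" and "always" are approximated by a running maximum and minimum over the current and all later time steps; the function names are hypothetical, and the linear and sigmoid parts of the layer are omitted for brevity.

```python
def eventually(truth):
    """out[t] = max of truth[t'] over all t' >= t (soft "eventually")."""
    out, running = [], float("-inf")
    for value in reversed(truth):
        running = max(running, value)
        out.append(running)
    return out[::-1]


def always(truth):
    """out[t] = min of truth[t'] over all t' >= t (soft "always")."""
    out, running = [], float("inf")
    for value in reversed(truth):
        running = min(running, value)
        out.append(running)
    return out[::-1]


# Soft truth value of one nullary feature at each of four time steps.
p = [0.1, 0.2, 0.9, 0.3]
print(eventually(p))  # [0.9, 0.9, 0.9, 0.3]
print(always(p))      # [0.1, 0.2, 0.3, 0.3]

# A temporally warped copy (every frame repeated twice) gives the same
# answer at the start of the sequence.
p_slow = [v for v in p for _ in range(2)]
print(eventually(p_slow)[0] == eventually(p)[0])  # True
```

Since the result at the first time step depends only on which values occur later and not on how long each one lasts, this kind of operator is unaffected by stretching or compressing the sequence in time.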

=Resources

- Temporal and Object Quantification Networks in [PyTorch].

- The soccer event dataset generated by the Google Football simulator: [Zip File].

- The robotic manipulation dataset generated by the RLBench simulator: [Zip File].